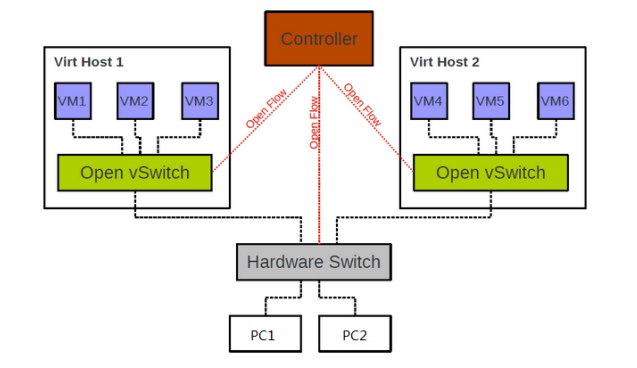

OVS Network Architecture

Open vSwitch is an open-source virtual switch that supports the OpenFlow protocol. It can be managed remotely by controllers through the OpenFlow protocol to achieve networking and interconnection for connected virtual machines or devices. Its main functions are:

- Transmitting traffic between virtual machines

- Enabling communication between virtual machines and external networks

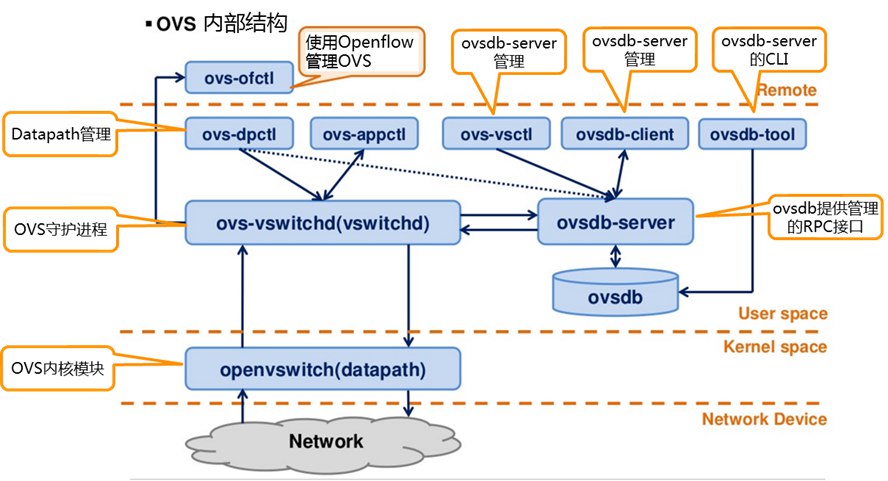

OVS Internal Structure

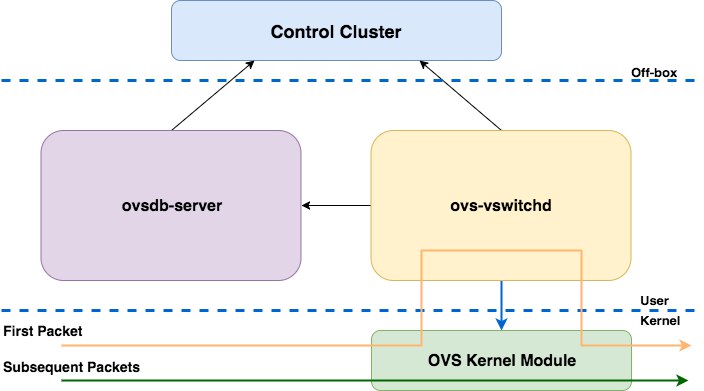

OVS has three core components:

ovs-vswitchd: The OVS daemon process, which is the core component implementing switching functionality. Working together with the Linux kernel compatibility module, it implements flow-based switching. It communicates with:

- Upper-layer controllers using the OpenFlow protocol

- ovsdb-server using the OVSDB protocol

- The kernel module via Netlink

It supports multiple independent datapaths (bridges) and implements features like bonding and VLAN through flow table modifications.

ovsdb-server: A lightweight database server that stores all OVS configuration information, including bridges, ports, interfaces, VLANs, etc. ovs-vswitchd operates based on the configuration information in this database. It exchanges information with the manager and ovs-vswitchd using the OVSDB protocol (JSON-RPC).
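To make the OVSDB (JSON-RPC) exchange more concrete, here is a minimal sketch of what a client request could look like. It assumes the `transact` method and `select` operation as described in RFC 7047 and the standard `Open_vSwitch` database with a `Bridge` table; the helper names (`build_transact`, `select_bridge_names`, `bridge_names_from_reply`) are made up for this illustration, and the sketch only builds and decodes messages without opening a socket.

```python
import json
from itertools import count

_request_ids = count()  # JSON-RPC requests carry an id that the reply echoes back


def build_transact(database, operations):
    """Build an OVSDB "transact" request (layout assumed from RFC 7047)."""
    return {
        "method": "transact",
        "params": [database, *operations],
        "id": next(_request_ids),
    }


def select_bridge_names():
    """A "select" operation asking for the name column of every Bridge row."""
    return {"op": "select", "table": "Bridge", "where": [], "columns": ["name"]}


def bridge_names_from_reply(reply):
    """Extract bridge names from the reply to a transaction with one select."""
    if reply.get("error") is not None:
        raise RuntimeError(f"OVSDB error: {reply['error']}")
    return [row["name"] for row in reply["result"][0]["rows"]]


request = build_transact("Open_vSwitch", [select_bridge_names()])
wire_bytes = json.dumps(request).encode()  # what would be written to db.sock
```

In a real deployment the encoded request would be written to the Unix domain socket that ovsdb-server listens on; tools such as ovs-vsctl do this on your behalf.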

OVS kernel module (datapath + flowtable): The kernel module responsible for data processing. It matches packets received from input ports against the flow table and executes matching actions. It handles packet switching and tunneling, caches flows, and forwards packets based on cached rules or sends them to userspace for processing. A datapath can correspond to multiple vports, similar to physical switch ports. Each OVS bridge has a corresponding kernel-space datapath that controls data flow based on the flow table.

For easier configuration and management, the following tools are provided:

- ovs-dpctl: Configures the switch kernel module and controls forwarding rules

- ovs-vsctl: Queries or updates ovs-vswitchd configuration by operating on ovsdb-server

- ovs-appctl: Sends commands to OVS daemons

- ovsdbmonitor: GUI tool for displaying ovsdb-server data

- ovs-controller: A simple OpenFlow controller (renamed ovs-testcontroller in newer OVS releases)

- ovs-ofctl: Queries and controls flow table contents when OVS operates as an OpenFlow switch

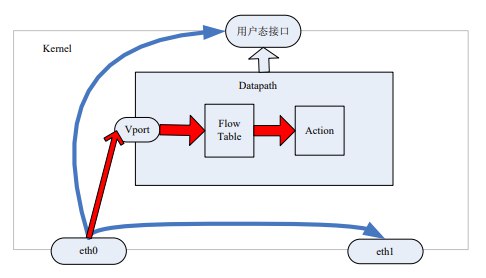

From the architecture, we can see that in userspace, two processes are running: ovs-vswitchd and ovsdb-server. Simply put, ovsdb-server persists ovs-vswitchd’s configuration to a database, typically located at /etc/openvswitch/conf.db. ovs-vswitchd is a daemon process that reads configuration information from ovsdb-server and synchronizes any configuration updates back to ovsdb-server. They communicate via Unix domain sockets. The kernel space mainly consists of the datapath and flow table. Communication between userspace and kernel space is implemented through the Netlink protocol.

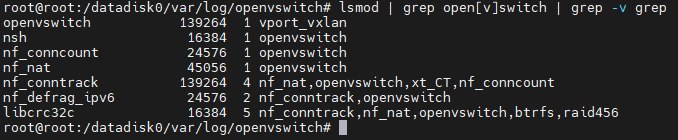

Viewing loaded kernel modules

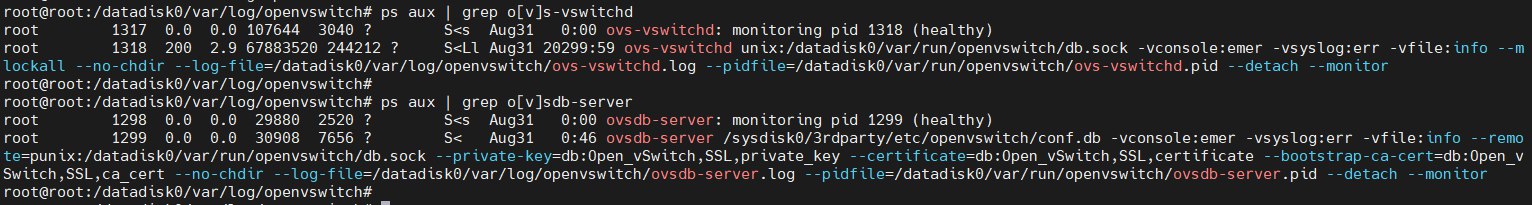

Looking at the processes, we can see:

**ovs-vswitchd** runs with the command `ovs-vswitchd unix:/datadisk0/var/run/openvswitch/db.sock`, specifying the Unix domain socket path for communication with **ovsdb-server**. It connects to the local db.sock file rather than listening on it. The remaining options set log levels for the three VLOG facilities, use mlockall to lock the process memory in physical RAM, and specify the log file and PID file paths.

**ovsdb-server** runs with the command `ovsdb-server /sysdisk0/3rdparty/etc/openvswitch/conf.db`, which names conf.db as the database file that stores the configuration. The `--remote` parameter specifies how clients connect to the ovsdb-server database; here it is `punix:/db.sock`, a passive Unix domain socket on which ovsdb-server listens for inter-process communication. ovs-vswitchd reads its configuration through this db.sock. Additional options include the log file path, the PID file path, and various certificates and keys.

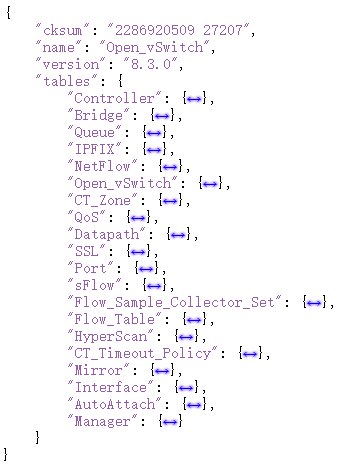

conf.db is in JSON format and can be viewed using cat with JSON tools or the ovsdb-client dump command to print the database structure.

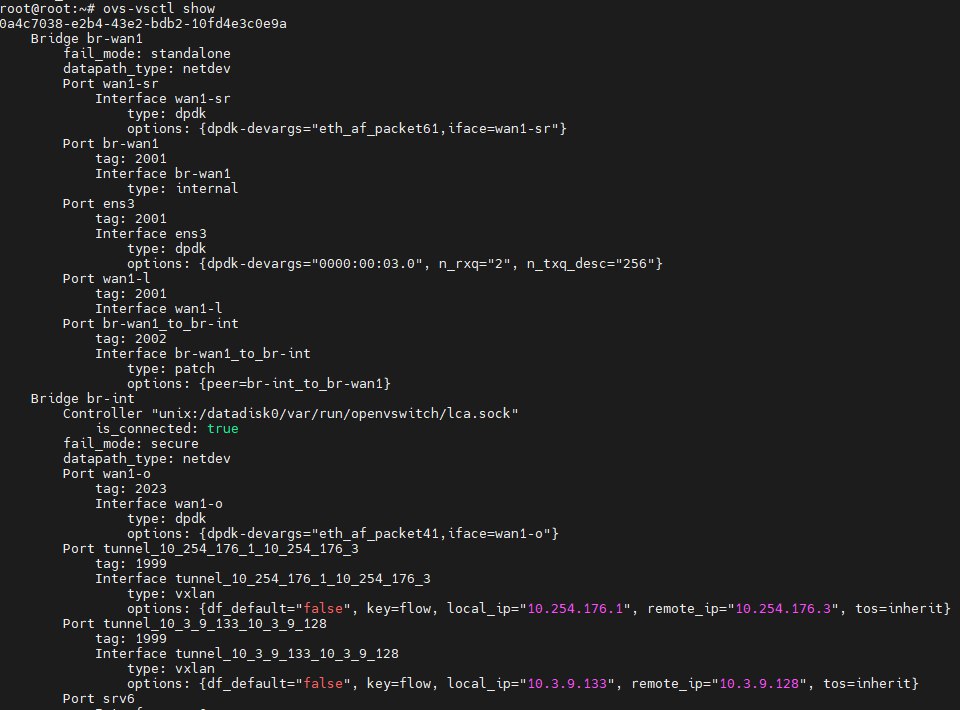

All bridges and network interfaces created through ovs-vsctl are stored in the database, and ovs-vswitchd creates the actual bridges and interfaces based on this configuration.

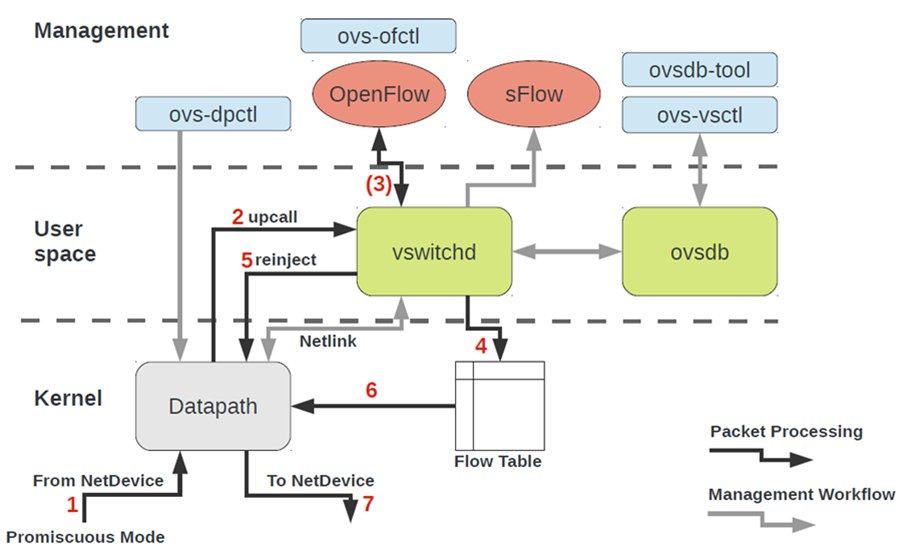

Data Flow

In normal Linux networking, packets follow the blue arrows. When NIC eth0 receives a packet, the kernel decides where it goes: up to userspace if it is addressed to the local host, or out through another NIC such as eth1 if it must be forwarded (by layer 2 switching or layer 3 routing).

With OVS, after creating a bridge (ovs-vsctl add-br br0) and binding a NIC (ovs-vsctl add-port br0 eth0), the data flow follows the red arrows:

- Packets from eth0 enter OVS through the Vport

- The flow table in the kernel matches packet keys and executes corresponding actions if matched

- If no match is found, an upcall sends the packet to userspace via Netlink, where ovs-vswitchd looks it up in its OpenFlow flow tables (ovsdb holds configuration only, not flows)

- If there is still no match, ovs-vswitchd communicates with the controller via OpenFlow to receive flow entries

- Matching flow entries are sent to the kernel flow table via Netlink

- Packets are reinjected to the kernel via Netlink

- The kernel matches flow entries and executes corresponding actions
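The steps above can be sketched as a toy model. This is not OVS code: `ToySwitch`, its fields, and the string actions are hypothetical names chosen for illustration, a packet is reduced to a flow key, and the Netlink and OpenFlow hops are replaced by plain dictionary lookups. The point is only to show why the first packet of a flow takes the slow path while later packets stay in the kernel.

```python
from dataclasses import dataclass, field


@dataclass
class ToySwitch:
    """Toy model of the kernel flow cache (fast path) and userspace (slow path)."""
    openflow_table: dict                                  # userspace rules: flow key -> action
    controller_rules: dict = field(default_factory=dict)  # what a controller would hand out
    kernel_cache: dict = field(default_factory=dict)      # stands in for the kernel flow table
    upcalls: int = 0                                      # packets that missed the kernel cache

    def receive(self, key):
        """Handle one packet, represented only by its flow key."""
        action = self.kernel_cache.get(key)
        if action is None:               # miss in the kernel: hand over to userspace
            action = self._upcall(key)
        return action

    def _upcall(self, key):
        """Slow path: resolve the action in userspace, then install it in the cache."""
        self.upcalls += 1
        action = self.openflow_table.get(key)
        if action is None:               # unknown in userspace too: ask the controller
            action = self.controller_rules.get(key, "drop")
            self.openflow_table[key] = action
        self.kernel_cache[key] = action  # later packets of this flow stay in the kernel
        return action


switch = ToySwitch(openflow_table={("eth0", "aa:bb:cc:dd:ee:01"): "output:vnet0"})
first = switch.receive(("eth0", "aa:bb:cc:dd:ee:01"))   # slow path, installs a cache entry
second = switch.receive(("eth0", "aa:bb:cc:dd:ee:01"))  # fast path, served from the cache
```

After both calls `switch.upcalls` is 1, because only the first packet needed userspace. The `"drop"` default for flows the controller does not know is an assumption of this sketch, not a statement about OVS defaults.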

In development, code modifications typically occur in:

- The ovs_dp_process_received_packet() function in datapath.c, which all packets must pass through

- Custom flow tables, where you can design your own actions to achieve desired functionality

Additional Network Concepts

Bridge: A virtual network device that functions as an Ethernet switch. Multiple bridges can exist on one virtual host. In OVS, each virtual switch (vswitch) can be regarded as a bridge, because it provides the same switching function as the Linux bridge module.

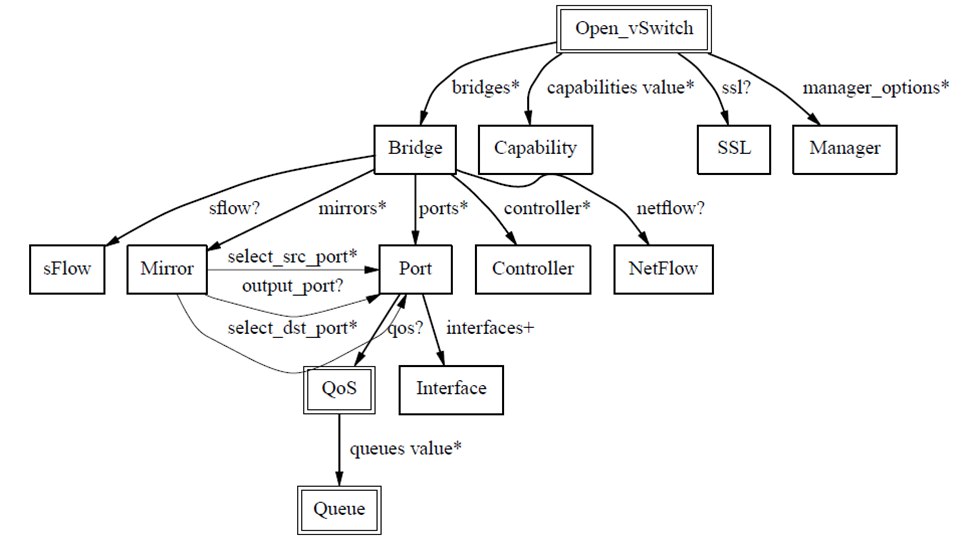

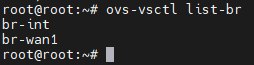

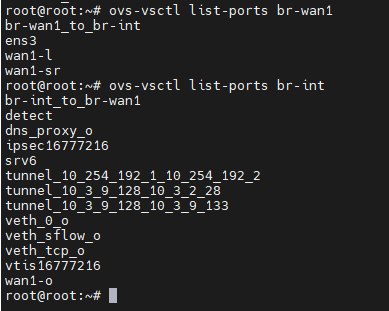

Datapath: In OVS, datapath is responsible for executing data switching - it matches incoming packets from receive ports against the flow table and executes matching actions. A datapath can be thought of as a switch or bridge. As shown below, each datapath item contains several Port entries, which are the ports on the virtual switch (datapath). Datapath types are divided into netdev and system. Using ovs-vsctl show displays detailed information - for example, the port tunnel_10_3_9_133_10_3_9_128 under br-int has established a vxlan tunnel with the remote port.

Port: Similar to physical switch ports, each Port belongs to a Bridge (datapath).

Interface: A network interface device connected to a Port. Normally, Port and Interface have a one-to-one relationship, except when Port is configured in bond mode, where it becomes one-to-many.
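The Bridge, Port, and Interface relationship described above can be modelled with a few small classes. This is only an illustrative data model; the class and method names are hypothetical and do not correspond to any OVS API. It assumes that a plain port gets a single interface with the same name, while a bonded port is given several interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class Interface:
    name: str


@dataclass
class Port:
    name: str
    interfaces: list = field(default_factory=list)

    @property
    def is_bond(self):
        # One interface is the normal case; more than one means a bond.
        return len(self.interfaces) > 1


@dataclass
class Bridge:
    name: str
    ports: dict = field(default_factory=dict)

    def add_port(self, port_name, interface_names=None):
        """Add a port; without explicit interfaces it gets one named like the port."""
        names = interface_names or [port_name]
        port = Port(port_name, [Interface(n) for n in names])
        self.ports[port_name] = port
        return port


br0 = Bridge("br0")
br0.add_port("eth0")                     # one-to-one: Port eth0 -> Interface eth0
br0.add_port("bond0", ["eth1", "eth2"])  # one-to-many: Port bond0 -> two interfaces
```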

Controller: OpenFlow controller. OVS can be managed by one or multiple OpenFlow controllers simultaneously.

Flow table: Each datapath is associated with a “flow table”. When datapath receives data, OVS looks for matching flows in the flow table and executes corresponding actions, such as forwarding data to another port. OpenFlow-compatible switches should include one or more flow tables, with entries containing: packet header information, instructions to execute upon matching, and statistics.
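A flow table entry with its three parts (match fields, actions, statistics) could be sketched as follows. The names `FlowEntry` and `FlowTable` and the dictionary-based packet representation are assumptions made for this example; real OpenFlow matching supports masks and many more fields. The sketch shows priority-ordered lookup and per-entry counters.

```python
from dataclasses import dataclass


@dataclass
class FlowEntry:
    match: dict          # header fields that must be equal; missing fields are wildcards
    actions: list        # e.g. ["output:2"]
    priority: int = 0
    n_packets: int = 0   # statistics updated on every hit
    n_bytes: int = 0

    def matches(self, packet):
        return all(packet.get(name) == value for name, value in self.match.items())


class FlowTable:
    def __init__(self):
        self.entries = []

    def add(self, entry):
        self.entries.append(entry)
        # Highest priority first; the sort is stable, so ties keep insertion order.
        self.entries.sort(key=lambda e: -e.priority)

    def lookup(self, packet, length):
        """Return the actions of the best matching entry, or None on a table miss."""
        for entry in self.entries:
            if entry.matches(packet):
                entry.n_packets += 1
                entry.n_bytes += length
                return entry.actions
        return None


table = FlowTable()
table.add(FlowEntry(match={}, actions=["drop"], priority=0))  # catch-all entry
table.add(FlowEntry(match={"in_port": 1, "ip_dst": "10.0.0.2"},
                    actions=["output:2"], priority=100))
actions = table.lookup({"in_port": 1, "ip_dst": "10.0.0.2"}, length=64)
```

Here the more specific entry wins because of its higher priority, even though the catch-all entry was added first.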

Flow: In the OpenFlow whitepaper, a Flow is defined as specific network traffic. For example, a TCP connection is a Flow, or packets from a particular IP address can be considered a Flow.

Patch: Different bridges in OVS can be connected via patch ports, similar to Linux veth interfaces. Patch ports only exist on bridges, not in datapath. If the output port is a patch port, it’s equivalent to its peer device receiving the packet and looking up OpenFlow flow tables in the peer device’s bridge for forwarding. Bridges with different datapath types cannot be connected via patch ports.

Tun/Tap: TUN/TAP devices are virtual network cards implemented in the Linux kernel. While physical NICs send/receive packets over physical lines, TUN/TAP devices send/receive Ethernet frames or IP packets from userspace applications. TAP is equivalent to Ethernet devices, operating on L2 data link layer frames; TUN simulates L3 network layer devices, operating on network layer IP packets.
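The difference between the two device types shows up in what a userspace program has to parse. The sketch below decodes the first header a reader would see in each case: an Ethernet header for TAP and an IPv4 header for TUN. It is an illustration on hand-built bytes, not code that opens a real device, and it assumes the device was configured without the extra packet-information prefix (the `IFF_NO_PI` flag) and that the TUN packet is IPv4.

```python
import socket
import struct


def parse_ethernet_header(frame: bytes):
    """TAP delivers L2 frames: the first 14 bytes are an Ethernet II header."""
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return {
        "dst": dst.hex(":"),
        "src": src.hex(":"),
        "ethertype": ethertype,
        "payload": frame[14:],
    }


def parse_ipv4_header(packet: bytes):
    """TUN delivers L3 packets: the first 20 bytes are the fixed IPv4 header."""
    (version_ihl, _tos, total_length, _ident, _frag,
     ttl, protocol, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    return {
        "version": version_ihl >> 4,
        "header_len": (version_ihl & 0x0F) * 4,
        "total_length": total_length,
        "ttl": ttl,
        "protocol": protocol,
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }


# A hand-built IPv4 header (TCP, 10.0.0.1 -> 10.0.0.2) wrapped in an Ethernet frame.
ip_packet = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                        socket.inet_aton("10.0.0.1"), socket.inet_aton("10.0.0.2"))
frame = bytes.fromhex("ffffffffffff") + bytes.fromhex("020000000001") + b"\x08\x00" + ip_packet

eth = parse_ethernet_header(frame)        # what a TAP reader would decode first
ip = parse_ipv4_header(eth["payload"])    # what a TUN reader would decode first
```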

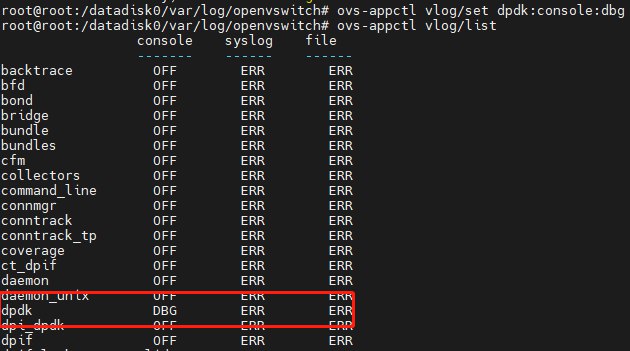

VLOG

Both OVS processes (ovsdb-server and ovs-vswitchd) use built-in Vlog to control their log content. Properly setting log modules and levels helps with troubleshooting and learning.

Open vSwitch has a built-in logging mechanism called VLOG. The VLOG tools allow you to enable and customize logging in various network switching components. Log information generated by VLOG can be sent to a console, syslog, and a separate log file for easy viewing. OVS logs can be dynamically configured at runtime using the ovs-appctl command line tool.

OVS logs are stored in /datadisk0/var/log/openvswitch on the device.

You can use ovs-appctl to view or modify the target process’s log. The default target is ovs-vswitchd, and you can specify a specific target using the -t parameter:

    $ ovs-appctl vlog/list    # equivalent to ovs-appctl -t ovs-vswitchd vlog/list
    $ ovs-appctl -t ovsdb-server vlog/list

    $ ovs-appctl vlog/list
                     console    syslog    file
                     -------    ------    ------
    backtrace          OFF        ERR       ERR
    bfd                OFF        ERR       ERR
    bond               OFF        ERR       ERR
    bridge             OFF        ERR       ERR
    bundle             OFF        ERR       ERR
    bundles            OFF        ERR       ERR
    cfm                OFF        ERR       ERR
    collectors         OFF        ERR       ERR
    command_line       OFF        ERR       ERR
    connmgr            OFF        ERR       ERR
    conntrack          OFF        ERR       ERR
    conntrack_tp       OFF        ERR       ERR
    coverage           OFF        ERR       ERR
    ct_dpif            OFF        ERR       ERR
    daemon             OFF        ERR       ERR
    daemon_unix        OFF        ERR       ERR
    dpdk               OFF        ERR       ERR
    ...

The output shows the log level of each module for each of the three facilities (console, syslog, file). The syntax for customizing VLOG is:

    $ ovs-appctl vlog/set [module][:facility[:level]]

Where module is the module name (like backtrace, bfd, dpdk), facility is the log destination (must be console, syslog, or file), and level is the log detail level (must be off, emer, err, warn, info, or dbg).

    $ sudo ovs-appctl vlog/set dpdk:console:dbg    # Change dpdk module's console log level to DBG
    $ sudo ovs-appctl vlog/set ANY:console:dbg     # Change console log level to DBG for all modules
    $ sudo ovs-appctl vlog/set ANY:any:dbg         # Change all facilities' log level to DBG for all modules

After the first command, the dpdk module's console log level is DBG, while its other two facilities (syslog and file) remain unchanged.
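To check a `module:facility:level` spec before passing it to `ovs-appctl vlog/set`, a small validator along these lines may help. It is a sketch under stated assumptions: the function name `parse_vlog_spec` is made up, an omitted module or facility is treated as `any`, and an omitted level is assumed to default to `dbg`. Module names are not validated, because the set of modules depends on the OVS build.

```python
FACILITIES = {"console", "syslog", "file"}
LEVELS = {"off", "emer", "err", "warn", "info", "dbg"}


def parse_vlog_spec(spec):
    """Split a vlog/set spec into (module, facility, level), all lowercase."""
    parts = spec.lower().split(":") if spec else []
    if len(parts) > 3:
        raise ValueError(f"too many fields in spec: {spec!r}")
    parts += [""] * (3 - len(parts))
    module = parts[0] or "any"     # assumption: omitted module means every module
    facility = parts[1] or "any"   # assumption: omitted facility means every facility
    level = parts[2] or "dbg"      # assumption: omitted level defaults to dbg
    if facility != "any" and facility not in FACILITIES:
        raise ValueError(f"unknown facility: {facility!r}")
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level!r}")
    return module, facility, level


spec = parse_vlog_spec("dpdk:console:dbg")
```

For the three commands above, the parsed results would be `("dpdk", "console", "dbg")`, `("any", "console", "dbg")`, and `("any", "any", "dbg")`.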